Legal Innovation & Technology Lab

@ Suffolk Law School

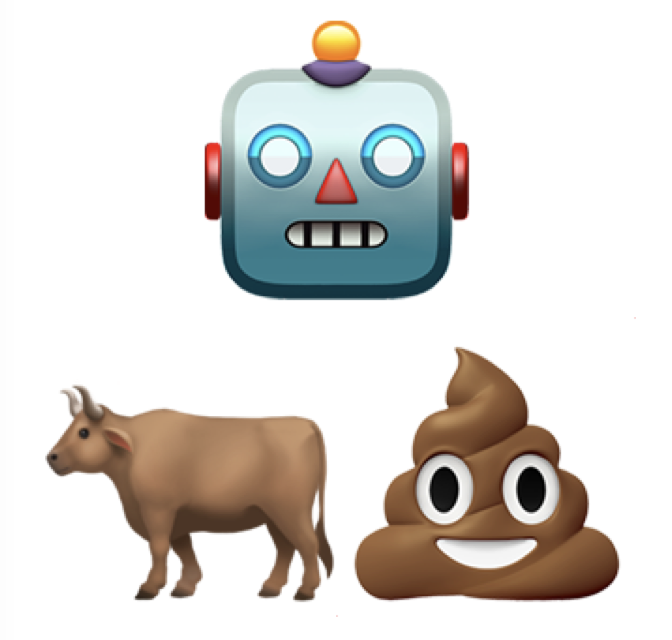

BS with an AI about what clinic you should take

Algorithmic BS?

Ask the bot below about Suffolk's clinics, but keep in mind...

The modern authoritarian practice of “flood[ing] the zone with shit” clearly illustrates the dangers posed by bullshitters—i.e., those who produce plausible sounding speech with no regard for accuracy. Consequently, the broad-based concern expressed over the rise of algorithmic bullshit is both understandable and warranted. Large language models (LLMs), like ChatGPT, which complete text by predicting subsequent words based on patterns present in their training data are the embodiment of such bullshitters. They are by design fixated on producing plausible sounding text, and since they lack understanding of their output, they cannot help but be unconcerned with accuracy. Couple this with the fact that their training texts encode the biases of their authors, and one can find themselves with what some have called mansplaining as a service.

So why did the LIT Lab use an LLM to build this tool, and why bother working with a known bullshitter?

For one, algorithmic BS artists lack agency. They do not understand their output. Their "dishonesty" is a consequence of their use case, not their character. Context matters and this can change from use case to use case. That's not the end of it though because context includes how a tool is made. What's clear, however, is that any moral duty present lies with the developers of a tool. By stepping into this role we explore these questions at the Lab, asking if such technology can have justifiable pro-social uses. When asked to complete a text prompt, LLMs default to constructing sentences that resemble their training data. This can lead to "confabulations." These models, however, need not operate in isolation. One can work to mitigate the problem of BS by providing context, e.g., by sourcing reliable texts and asking for output based on these. Such is the trick used here. We have asked an LLM (GPT-3) to reorder the contents of Suffolk's Clinical Information Packet in response to your questions. This of course does not limit the danger posed by such tools driving the cost of BS production to nearly zero, and it does not guarantee accuracy. It does, however, hint at the possibility of pro-social uses. Imagine if congressional staffers could ask questions of a 2,000-page bill published hours before a vote using natural language. If answers were coupled with cites to relevant sections, as we do below, this could help them quickly zero in on sections of interest when time is of the essence.

The problem of BS, however, is not the only issue faced by such models. When they allow end-users to provide their own prompts, they are subject to prompt injection, the careful crafting of inputs to override a tool's original instructions. What could go wrong? Oh no . . . you're going to try this below aren't you?

Mostly, we hope you will find this a novel way to engage with the information packet. And, if you would like to get your hands dirty creating, evaluating, and understand the legal implications of such tools, we suggest you consider enrolling in Coding the Law (offered in the fall) or the Legal Innovation & Technology (LIT) Clinic (open to 2Ls and 3Ls). Which brings is to the real reason one might consider working with this particular BS artist—because practice experience working with a tool leads to understanding, and understanding is power. That power is something you can leverage both for the benefit of your clients and practice. We don't expect our students to become production coders, but we'd like them to learn enough to call BS. And yes, this is largely a gimmick to get you interested in the Lab, but we also think it can answer a few of your questions. ;)

Remember, whatever the bot says, the universal clinical application is due Thursday, February 23 before 5pm Eastern.

Check cited sources before believing any answers below.

Remember, often folks can read what you write online. Learn more

--- OR ---

† The bot's sources include not only the packet but select content on or linked to from www.suffolk.edu/law and suffoklitlab.org. When an answer is found, the sources considered in constructing that answer are shown in the Sources section below each answer.